Dive Brief:

- A new model designed by researchers at the Massachusetts Institute of Technology (MIT) and Microsoft will help artificial intelligence (AI) systems identify and correct knowledge gaps. Fixing these "blind spots," the researchers say, will help driverless cars react more safely to unfamiliar situations.

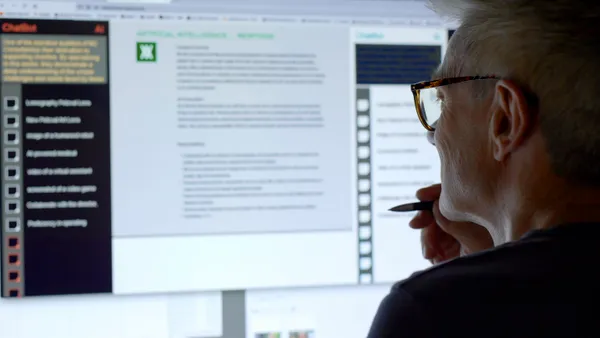

- The model works by having a human monitor an AI system’s actions and correct any mistakes; that feedback is then used to build a model showing where the machine needs more information to act correctly.

- It has so far only been used on a video game simulation, but will be incorporated into driverless car training. The model could even work in real time, by having a human driver take the wheel if an AI system is driving incorrectly, sending a signal that the action was incorrect.

Dive Insight:

For all of the real-world testing that’s taken place, driverless cars are still imperfect and can have trouble determining the correct move to make. For example, one of the “blind spots” that researchers highlighted was an inability to distinguish between a large white car and an ambulance, meaning a car may not know to pull over to the side. In tests, some cars have had trouble with snowy conditions or debris in the road.

The MIT and Microsoft model will help the AI system determine where its potential trouble spots are, even in situations where it is reacting safely 90% of the time. It then knows it can get guidance from a human driver in those situations, combining real-world experience with a learned policy.
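To make the idea concrete, here is a minimal Python sketch of how human corrections might be tallied to flag potential trouble spots. It is an illustration only, not the researchers' actual method: the function name `find_blind_spots`, the situation labels, the feedback format, and the 10% threshold are all assumptions made for this example.

```python
from collections import defaultdict


def find_blind_spots(feedback, threshold=0.1, min_samples=1):
    """Flag situations where a human corrected the AI often enough to matter.

    `feedback` is an iterable of (situation, corrected) pairs, where
    `corrected` is True if the human monitor overrode the AI's action.
    Hypothetical format, chosen for illustration.
    """
    counts = defaultdict(lambda: [0, 0])  # situation -> [corrections, total]
    for situation, corrected in feedback:
        counts[situation][1] += 1
        if corrected:
            counts[situation][0] += 1

    # Keep only situations whose correction rate meets the threshold.
    return {
        situation: bad / total
        for situation, (bad, total) in counts.items()
        if total >= min_samples and bad / total >= threshold
    }


# Example: the AI acts acceptably 9 times out of 10 around large white
# vehicles, but the single correction is still enough to flag the situation.
feedback = (
    [("large_white_vehicle", False)] * 9
    + [("large_white_vehicle", True)]
    + [("clear_road", False)] * 10
)
blind_spots = find_blind_spots(feedback, threshold=0.1)
print(blind_spots)
```

A simple frequency count like this treats every human signal as equally reliable; a real system would likely need to account for noisy or inconsistent feedback.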

“The idea is to use humans to bridge that gap between simulation and the real world, in a safe way, so we can reduce some of those errors,” said MIT graduate student and first author Ramya Ramakrishnan in a statement.

The need for such an algorithm is echoed in the ongoing discussion about “ethics” for autonomous vehicles (AVs) and how to program driverless systems to react to potentially dangerous situations. A 2017 study from the Institute of Cognitive Science at the University of Osnabrück determined that it was possible to program an AV with ethical decision-making that predicts, with up to 90% accuracy, what a human would do. A separate MIT study found too many cultural differences to set a global standard, but left open the discussion on how to program the cars.